We've archived the results of the first F{ai}R & Hackathon at #siliconlovefield, and moved the server to a more permanent URL. All the links and resources are still available here. Thanks everyone for coming, and stay tuned for future events!

Vibe(ish) coding with nvim, zed, tabby and opencode. Wondering who::else("here", wishes$) to work on a Swiss model fine-tuned on hackathon code? ✨🇨🇭🌾🌞

- ⚙️ Developer/Tech

Freelance coder, open data activist, working for decades on improving Internet platforms and building inclusive tech community events. Also a blogger and 8-bit artist who has been involved in teaching and social impact projects, coworking spaces, digital sustainability conferences, and never too many hackathons.

🌍 Bern

🧠 Debugging & dehallucinating

🔍 From AI to ROI in 24 hours

🎯 Eye-candy, Open Data, Ethics

Experience

Dribs

Using Runpod you can quickly start a machine - use this link to get credits. I recommend the PyTorch 2.4.0 template with an RTX 5090 and 15 vCPUs, as your project seems quite CPU-bound, not just GPU-bound.
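
Once the pod is up, a quick sanity check can confirm what resources you actually got. This is a minimal sketch, assuming a Linux pod and (optionally) the PyTorch install that comes with the template; `describe_machine` is a hypothetical helper, not part of Runpod.

```python
import os


def describe_machine() -> dict:
    """Report usable CPU cores and, if PyTorch is installed, the visible GPUs."""
    # sched_getaffinity respects CPU pinning on Linux; fall back to cpu_count elsewhere.
    if hasattr(os, "sched_getaffinity"):
        cpus = len(os.sched_getaffinity(0))
    else:
        cpus = os.cpu_count() or 1
    info = {"cpus": cpus, "torch": None, "cuda": False, "gpus": []}
    try:
        import torch  # bundled with the PyTorch template; optional here
    except ImportError:
        return info
    info["torch"] = torch.__version__
    info["cuda"] = torch.cuda.is_available()
    if info["cuda"]:
        info["gpus"] = [torch.cuda.get_device_name(i) for i in range(torch.cuda.device_count())]
    return info


if __name__ == "__main__":
    print(describe_machine())
```

Neither CPU call necessarily reflects a container CPU quota, so treat the reported core count as an upper bound when sizing data-loading workers for a CPU-bound workload.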

Some tips from our discussion this morning:

Using OpenRouteService with guidance from Apertus. The API connections are working and we are making progress. One of us is being slightly distracted by 3D robot vision 🤖
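
For anyone wanting to reproduce the routing part, here is a minimal sketch of an OpenRouteService directions request using only the Python standard library. It assumes the public v2 directions endpoint, an API key from openrouteservice.org (placeholder below) and the `foot-walking` profile; the Bern coordinates are approximate and purely illustrative, and the Apertus side is not shown.

```python
import json
import urllib.request

ORS_DIRECTIONS = "https://api.openrouteservice.org/v2/directions"


def build_directions_request(api_key: str, coords, profile: str = "foot-walking"):
    """Build a POST request for a route through `coords`, given as (lon, lat) pairs.

    OpenRouteService expects longitude first, as in GeoJSON.
    """
    body = json.dumps({"coordinates": [list(c) for c in coords]}).encode("utf-8")
    return urllib.request.Request(
        f"{ORS_DIRECTIONS}/{profile}",
        data=body,
        headers={"Authorization": api_key, "Content-Type": "application/json"},
        method="POST",
    )


def fetch_route(request) -> dict:
    """Send the request and decode the JSON response (needs network and a valid key)."""
    with urllib.request.urlopen(request, timeout=30) as resp:
        return json.load(resp)


# Approximate coordinates: Bern railway station to the Zytglogge.
req = build_directions_request("YOUR_ORS_API_KEY", [(7.4391, 46.9490), (7.4479, 46.9480)])
# route = fetch_route(req)  # uncomment with a real key
```

Keeping request construction separate from sending makes it easy to inspect or log exactly what is passed to the API before spending quota.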

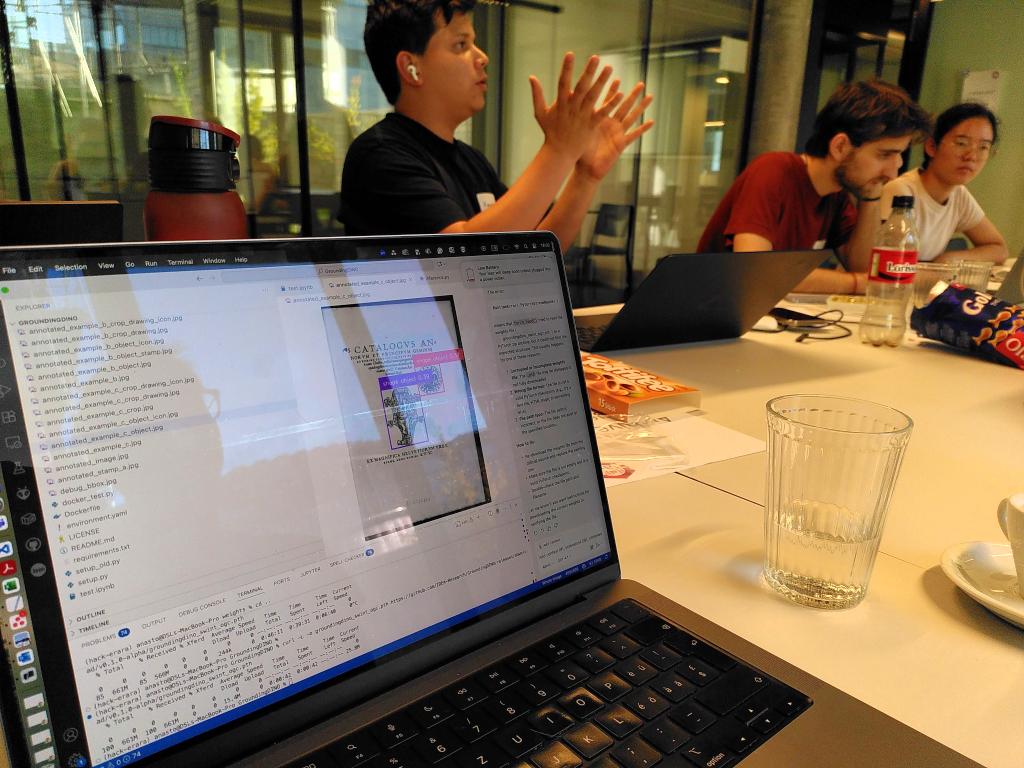

The team is on its way with a solution approach to mapping similarity between types of content. There are useful convolutional networks for this, and we could generate embeddings based on a database. Then we would filter pictures using the embeddings closest to a query. Using a vision transformer combined with text embeddings, we were able to detect the shapes, and we are working on a combined solution.
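
The retrieval step described above (keep the pictures whose embeddings are closest to a query) can be sketched with cosine similarity. This minimal version assumes the embeddings already exist, for example from a vision transformer for the pictures and a text encoder sharing the same vector space for the query; the toy two-dimensional vectors and picture ids are made up for illustration.

```python
import math


def cosine(a, b) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def closest(query, index: dict, k: int = 3) -> list:
    """Return the ids of the k embeddings in `index` most similar to `query`."""
    ranked = sorted(index, key=lambda item_id: cosine(query, index[item_id]), reverse=True)
    return ranked[:k]


# Toy index: picture id -> embedding.
index = {"pic_a": [1.0, 0.0], "pic_b": [0.0, 1.0], "pic_c": [0.9, 0.1]}
print(closest([1.0, 0.0], index, k=2))  # ['pic_a', 'pic_c']
```

This is a linear scan; for a real picture database you would normalise the vectors once and use a vector index instead.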

The team has been processing orthophotos and satellite data to try to detect solar panels. There is more literature available on this, and we expect clearer results. Two subgroups are working on manual classification: one labels polygons, the other georeferenced points.
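
One way to compare the two labelling approaches is to check how many of the georeferenced points fall inside the labelled polygons. The sketch below uses the standard ray-casting test; it assumes points and polygon vertices share the same planar coordinate system (for example Swiss LV95) and that polygons are simple, without holes. The function names are hypothetical.

```python
def point_in_polygon(x: float, y: float, polygon) -> bool:
    """Ray casting: count crossings of a horizontal ray from (x, y) with polygon edges."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the ray's height, so y1 != y2
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def share_of_points_covered(points, polygons) -> float:
    """Fraction of labelled points lying inside at least one labelled polygon."""
    if not points:
        return 0.0
    hits = sum(any(point_in_polygon(px, py, poly) for poly in polygons) for px, py in points)
    return hits / len(points)


square = [(0, 0), (10, 0), (10, 10), (0, 10)]  # toy "roof" polygon
print(share_of_points_covered([(5, 5), (15, 5)], [square]))  # 0.5
```

For real data, a geometry library such as Shapely would be the usual choice; the hand-written version just shows what the comparison computes.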

We had three business-oriented participants and two IT experts trying to clarify the use case. We are planning to develop an e-learning module on developing guardrails for a multimodal system. I'd suggest creating a screencast, inspired by the TextCortex YouTube channel, that explains LLM Guardrails (OpenAI) at a general level. This would help interest developers in implementing a solution. But I would also really like to see the product in action from a user perspective in this project first.
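
For the e-learning module, the basic idea of a guardrail could be illustrated with a small wrapper that checks the input before it reaches the model and the output before it reaches the user. This is a hypothetical, text-only sketch and not the OpenAI Guardrails API: the single rule (blocking card-number-like digit sequences) and the `echo_model` stand-in are placeholders, and a multimodal system would need additional checks for images.

```python
import re
from typing import Callable, List

# Hypothetical rule set: block anything that looks like a 16-digit card number.
BLOCKED_PATTERNS = [re.compile(r"\b\d{4}[ -]?\d{4}[ -]?\d{4}[ -]?\d{4}\b")]

REFUSAL = "Sorry, I can't help with that request."
WITHHELD = "[response withheld by guardrail]"


def violations(text: str) -> List[str]:
    """Return the patterns that match `text` (an empty list means the text passes)."""
    return [p.pattern for p in BLOCKED_PATTERNS if p.search(text)]


def guarded_call(prompt: str, model: Callable[[str], str]) -> str:
    """Run an input guardrail and an output guardrail around a model call."""
    if violations(prompt):  # input guardrail: never send flagged prompts
        return REFUSAL
    answer = model(prompt)
    if violations(answer):  # output guardrail: never show flagged answers
        return WITHHELD
    return answer


def echo_model(prompt: str) -> str:
    """Stand-in for a real LLM call."""
    return prompt.upper()


print(guarded_call("hello", echo_model))  # HELLO
```

Keeping the rules in a plain list makes it easy to show learners how adding or removing a rule changes the system's behaviour.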

After a few hours of work, we have managed to collect hardware power metrics from a testbed server in the Begasoft cloud. We need to write another script, because currently we can only read the average energy use. Still struggling with getting Apertus to work on a local NVIDIA machine, kinda wish we had a Mac ;-) j/k
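
If the testbed exposes Intel RAPL counters through the Linux powercap interface (an assumption; the post does not say which source the team used), per-interval power can be derived from the cumulative energy counter instead of relying on an average. A minimal sketch: sample `energy_uj` at fixed intervals and divide the difference by the elapsed time, allowing for counter wraparound. Reading these files usually requires root, and RAPL is often unavailable inside virtual machines.

```python
import time
from pathlib import Path

# Assumed location of the package-0 RAPL domain; varies by machine.
RAPL_DOMAIN = Path("/sys/class/powercap/intel-rapl:0")


def power_watts(start_uj: int, end_uj: int, seconds: float, max_range_uj: int) -> float:
    """Average power between two readings of a cumulative microjoule counter."""
    delta = end_uj - start_uj
    if delta < 0:  # the counter wrapped around its maximum
        delta += max_range_uj
    return delta / 1e6 / seconds


def sample_power(interval: float = 1.0, samples: int = 5, domain: Path = RAPL_DOMAIN) -> list:
    """Read the counter every `interval` seconds and return one wattage per interval."""
    max_range = int((domain / "max_energy_range_uj").read_text())
    prev = int((domain / "energy_uj").read_text())
    t_prev = time.monotonic()
    readings = []
    for _ in range(samples):
        time.sleep(interval)
        cur = int((domain / "energy_uj").read_text())
        t_now = time.monotonic()
        readings.append(power_watts(prev, cur, t_now - t_prev, max_range))
        prev, t_prev = cur, t_now
    return readings


# Usage on the server (typically as root): print(sample_power())
```

Shorter intervals give finer time resolution at the cost of noisier readings.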

Prof. Marcel Gygli recommends https://lmarena.ai to find out which model is best for your use case

I've shared some early impressions and conversations with Apertus on my blog: https://log.alets.ch/107/ There is also a new post up with coverage of the Swiss {ai} Weeks in Bern.

The upgrade of the Swiss {ai} Weeks org will run today around 15h30 CET. All users who join the hackathon will benefit from:

🔥 Spaces to build ML applications

🚀 Inference Endpoints for secure production APIs

👐 Supported Inference Providers and HF Inference API

💰 USD 10 credit on your account

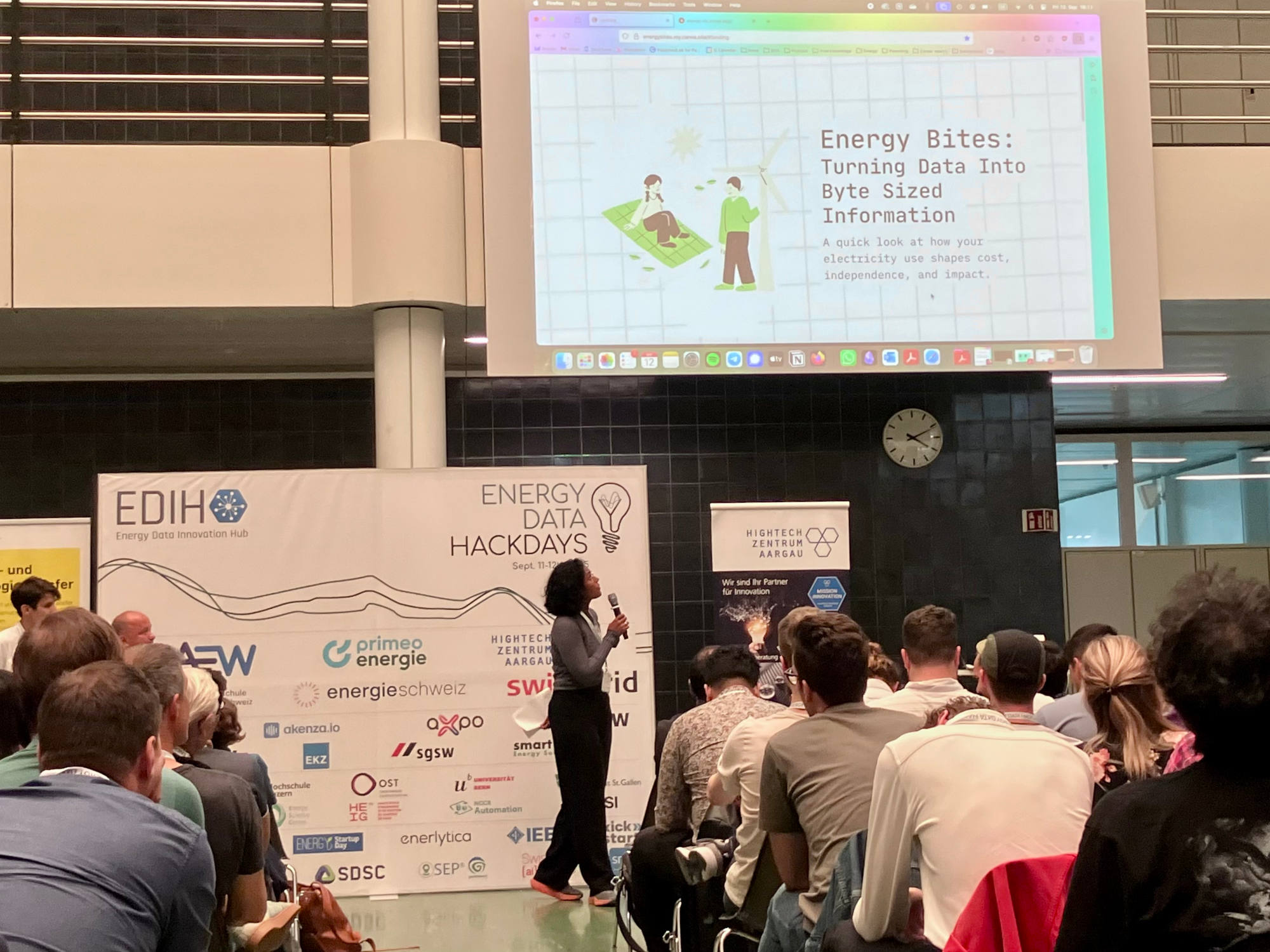

Greetings from the Energy Data Hackdays, first of the Swiss {ai} Weeks hackathons. Try the 💡 Energy Bites demo here

I've opened a PR on the Language Technology Assessment database to get started here.

Updated with the latest data and benchmarks, thanks again to Michael J. Baumann (effektiv.ch) for making this easy.

I've been working on a simple, easy-to-replicate Hugging Face space for working with Apertus. Perfect for hackathons! Try it at https://huggingface.co/spaces/loleg/fastapi-apertus Source code / send bugs to https://codeberg.org/loleg/fastapi-apertus

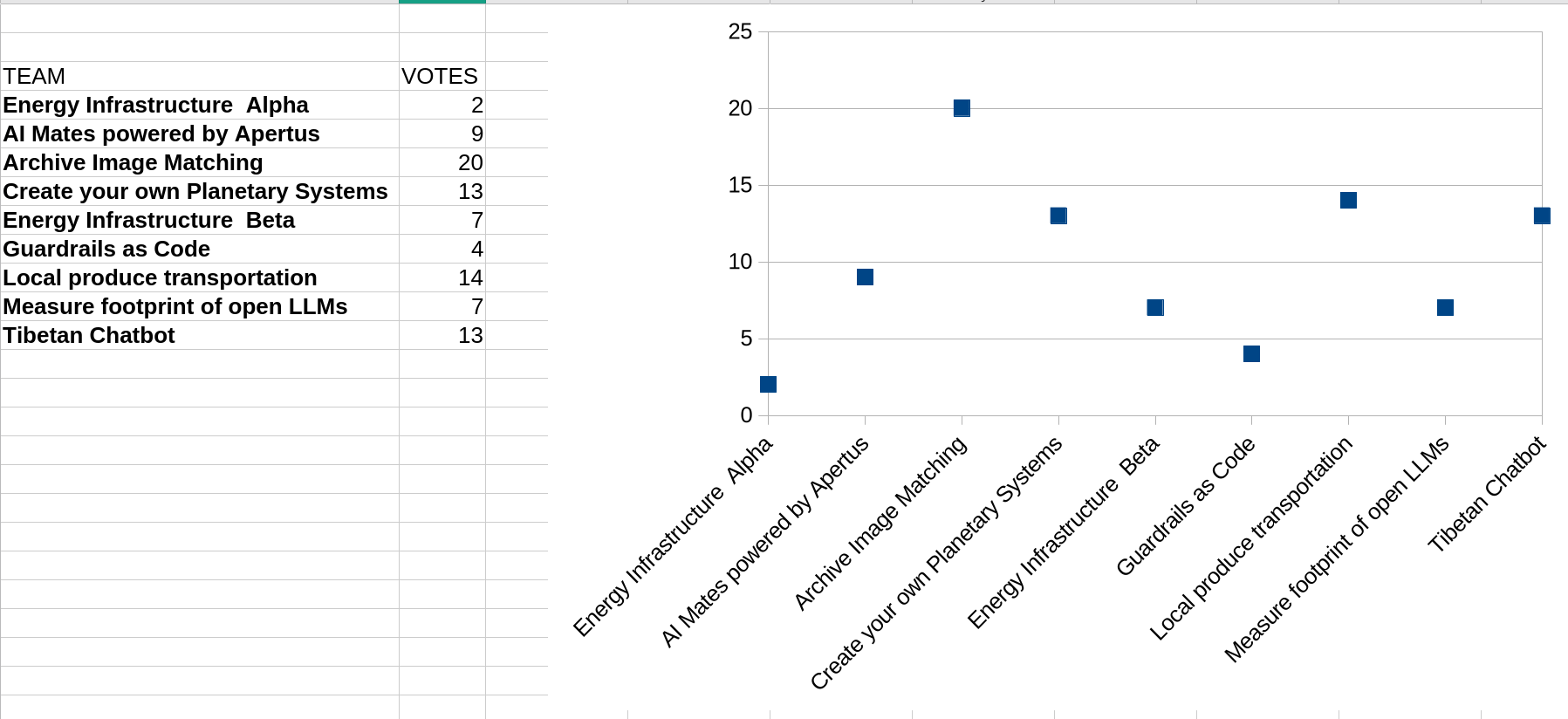

Results of our public vote - congratulations to all the teams!

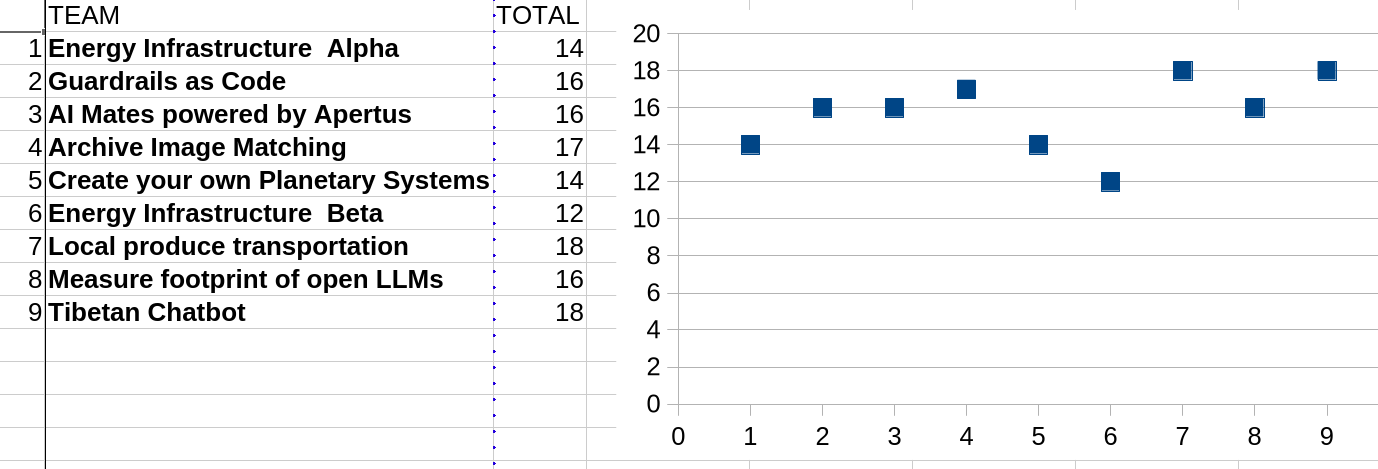

Results of the AI voting, powered by Dribdat, Apertus 70B &

Screenshot from

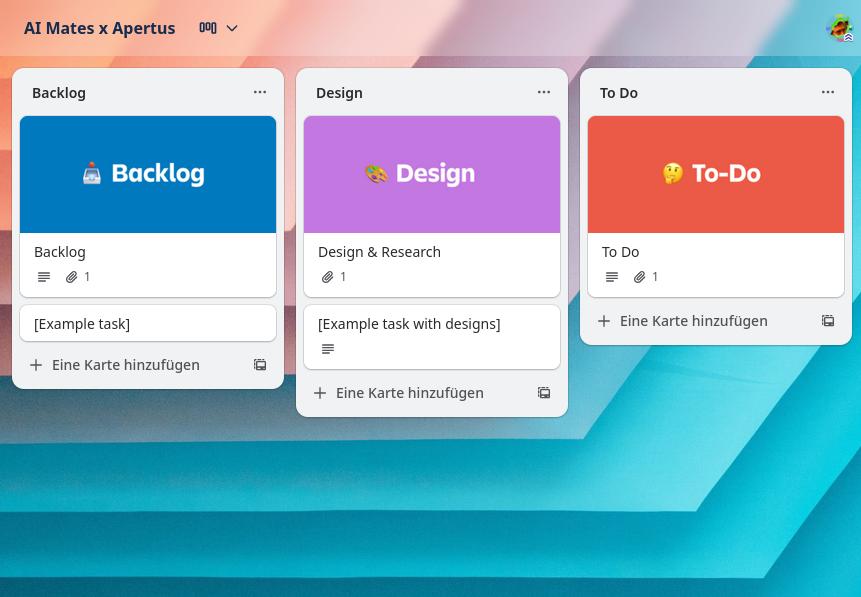

Setting up a Kanban board on Trello