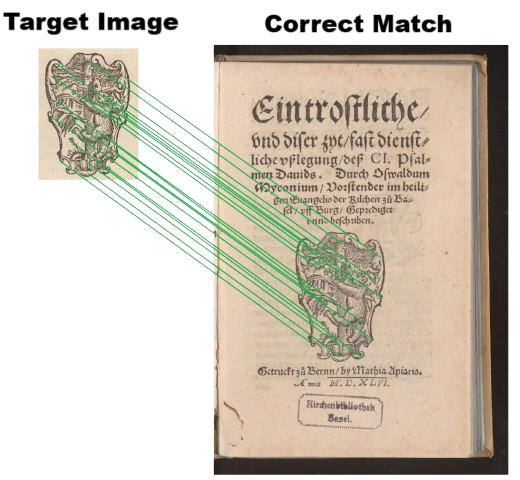

The goal is to build a tool that can take an input image (e.g., Fig. 1) and, with optional filters such as location or date range to narrow the search, automatically scans the e-rara archive for visually similar pages. The tool should return direct links to matching results, enabling researchers and users to quickly identify recurring motifs, printer’s devices, illustrations, or other visual elements across the archive.

Inputs

The dataset for this challenge is provided by e-rara.ch, which hosts digitized versions of historical books and offers an API for image access. The full archive contains over 154'000 titles and millions of scanned pages. However, for practical purposes, researchers often limit their scope to fewer than 100 titles, amounting to a few thousand pages - making local processing feasible. Although processing on the Ubelix cluster is also a possibility.

Goals

Art historians and scholars in related fields would greatly benefit from the ability to search for visually similar images within large catalogues of historical prints. A particularly valuable use case is identifying recurring visual elements - such as printer's imprints - across different books and editions.

For optimal relevance, the matching should account for different visual variations, such as:

- Different sizes

- Mirroring or rotation

- Ink smudges or degradation

- Colorization

Constraints & Considerations

- Approaches using image classifiers, local feature descriptors, or other vision methods are welcome.

- A fast matching algorithm is required given the large amount of fetched images.

- Solutions that do not require a GPU and can run locally are especially encouraged.

- Creativity in lightweight or approximate matching is valued.

Team

Our team will ideally include:

- Computer Vision engineer: interested in image processing, feature extraction, and pattern detection.

- Backend engineer: someone with expertise in working with APIs and cloud data extraction.

- Usability engineer: a designer interested in creating a web-based UI for our a tool.

Hackathon Solution

Our team developed an innovative method to address the limitations of traditional feature extraction techniques in the context of scanned documents.

A wide variety of feature extraction algorithms have been proposed for processing images. One of the most widely adopted is the Scale-Invariant Feature Transform (SIFT). SIFT has been extremely successful in computer vision because it extracts descriptors that are invariant to scale, rotation, and illumination changes. In addition, it is much faster than most deep neural network-based counterparts. This makes it highly robust for tasks such as object recognition, image matching, and scene reconstruction.

However, applying SIFT directly to scanned documents introduces significant challenges. Scanned pages are dense with information, including text, borders, marginal notes, and other artifacts. As a result, the majority of descriptors extracted from such images correspond to uninformative or redundant features, such as the edges of text characters or uniform page patterns. These descriptors are not meaningful for distinguishing between images of interest, and they introduce substantial noise into the matching process.

To overcome this limitation, our team designed a novel solution inspired by techniques from information retrieval. We applied term frequency–inverse document frequency (TF-IDF) weighting to the extracted descriptors. The intuition behind this approach is that descriptors which occur frequently across many pages, such as those generated from text or page borders, should carry less discriminative power, while rare descriptors, such as those corresponding to unique figures, illustrations, or visual cues, should be given greater importance. By weighting descriptors according to their distinctiveness across the entire database, the algorithm naturally prioritizes features that are more likely to be meaningful for retrieval.

Once descriptors are weighted, we organize them into a hierarchical verbal tree structure. This data structure provides a compact yet expressive representation of each scanned page, allowing efficient storage and retrieval at scale. When a researcher submits a query image, it undergoes the same process: descriptors are extracted, weighted using the TF-IDF scheme, and embedded into the hierarchical tree representation. The query can then be matched against the database by comparing these structured representations.

This approach yields several advantages:

- Noise reduction: Irrelevant descriptors from text and borders are down-weighted.

- Discriminative focus: Unique image features, such as illustrations or diagrams, gain higher priority in matching.

- Scalability: The hierarchical structure allows efficient indexing and retrieval, even in very large collections of scanned pages.

- Robustness: The method maintains the core strengths of SIFT (scale, rotation, and illumination invariance) while tailoring the representation to the challenges of scanned documents.

By combining established computer vision techniques with concepts from information retrieval, our team created a system that significantly improves the accuracy and efficiency of image retrieval in large collections of scanned documents.

Contacts

For any question you can contact matteo.boi@unibe.ch

This challenge originates from Torben Hanhart at the Institute of Art History, University of Bern.

Fig. 1: Printer’s imprint used in Bern, ca. 1400–1600. Example reference image, with the corresponding correct match identified within the archive.

E-rara Image Matchmaking API

A FastAPI-based service for searching and retrieving historical images from the e-rara digital library using bibliographic criteria and optional reference images.

Overview

This API provides an IMAGE_MATCHMAKING operation that allows clients to:

- Search e-rara's collection using metadata filters (author, title, place, publisher, date range)

- Upload reference images for similarity matching

- Receive both thumbnail and full-resolution image URLs

- Handle large result sets asynchronously with job polling or SSE streaming

- Smart page selection to avoid book covers and prioritize content pages

Features

- Dual input support - Accepts both JSON and multipart form-data

- Smart page filtering - Automatically skips cover pages and selects content pages

- IIIF image URLs - Returns proper thumbnail and full-resolution URLs

- Manifest integration - Expands records to individual pages with full page ID arrays

- Async processing - Background jobs for large result sets (>100 images)

- Streaming support - Server-Sent Events (SSE) for real-time progress

- Comprehensive validation - Input validation, image URL verification, error handling

- Rich metadata - Returns record IDs, page counts, manifest URLs, and complete page arrays

- Flexible field mapping - Supports various field name formats (e.g., "Printer / Publisher", "printer/publisher")

Quick Start

Prerequisites

pip install fastapi uvicorn requests beautifulsoup4 python-multipart pydantic

Running the API

uvicorn image_matchmaking_api:app --reload

The API will be available at:

- Base URL: http://127.0.0.1:8000

- Interactive docs: http://127.0.0.1:8000/docs

- OpenAPI spec: http://127.0.0.1:8000/openapi.json

Recent Updates (v2.0)

🎯 Smart Page Selection

- Automatic cover filtering: No more book covers! API now selects content pages by default

- Intelligent page targeting: Selects pages from middle content sections

- Configurable strategies: Choose between content, first page, or random selection

📝 JSON API Support

- Modern JSON requests: Clean, structured requests instead of form data

- Flexible field mapping: Supports various field name formats

- Better validation: Pydantic models for request validation

🔧 Enhanced Criteria Processing

- Fixed field mapping: "Printer / Publisher" and similar variations now work correctly

- Case-insensitive matching: Field names are normalized automatically

- Multiple format support: Handle different naming conventions seamlessly

API Endpoints

POST /api/v1/matchmaking/images/search

Main search endpoint supporting both JSON and form-data input.

JSON Request Format (Recommended)

{

"operation": "IMAGE_MATCHMAKING",

"criteria": [

{

"field": "Printer / Publisher",

"value": "Bern*"

},

{

"field": "Place",

"value": "Basel"

}

],

"from_date": "1600",

"until_date": "1620",

"maxResults": 10,

"avoid_covers": true,

"page_selection": "content"

}

New JSON Parameters

avoid_covers(boolean, default: true): Skip book covers and select content pagespage_selection(string, default: "content"): Page selection strategy"content": Smart content page selection (skips covers)"first": Original behavior (first page, likely cover)"random": Random page selection

Performance Parameters

validate_images(boolean, default: true): Verify image accessibilitytrue: Ensures all returned images are accessible (slower but more reliable)false: Skip validation for 30-50% speed improvement

max_workers(integer, default: 4): Concurrent processing threads for multi-record requests

POST /api/v1/matchmaking/images/search/form

Legacy form-data endpoint for backward compatibility.

Required Fields

operation(string): Must be "IMAGE_MATCHMAKING"projectId(string): Project identifieragentId(string): Agent identifier

Optional Fields

conversationId(string): UUID for traceabilityfrom_date(string): Start year (YYYY format)until_date(string): End year (YYYY format)maxResults(integer): Maximum number of resultspageSize(integer): Page size for paginationincludeMetadata(boolean): Include metadata (default: true)responseFormat(string): "json" or "stream"locale(string): Language preferencecriteria(array): Search criteria in format "field:value:operator"uploadedImage(files): Reference images for similarity matching

Synchronous Response (≤100 results)

{

"images": [

{

"recordId": "6100663",

"pageId": "6100665",

"thumbnailUrl": "https://www.e-rara.ch/i3f/v21/6100665/full/,150/0/default.jpg",

"fullImageUrl": "https://www.e-rara.ch/i3f/v21/6100665/full/full/0/default.jpg",

"pageCount": 372,

"pageIds": ["6100665", "6100666", "6100667", "..."],

"manifest": "https://www.e-rara.ch/i3f/v21/6100663/manifest"

}

],

"count": 1

}

Async Response (>100 results)

{

"jobId": "uuid-string",

"status": "pending"

}

GET /api/v1/matchmaking/images/results

Poll for async job results.

Parameters:

jobId(required): Job identifierpageToken(optional): Pagination token

GET /api/v1/matchmaking/images/stream

Server-Sent Events stream for async job progress.

Parameters:

jobId(required): Job identifier

Search Criteria

Supported Fields

The API supports flexible field name formats for better usability:

- Title:

"Title","title" - Author:

"Author","Creator","author","creator" - Place:

"Place","Publication Place","Origin Place","place" - Publisher:

"Publisher","Printer","Printer / Publisher","printer/publisher"

Smart Page Selection

NEW: The API now intelligently selects content pages instead of covers:

- Default behavior: Automatically skips first 2-3 pages (covers, title pages)

- Content targeting: Selects pages from the middle content section

- Adaptive logic: Adjusts skip amounts based on document length

- Short document handling: For documents ≤3 pages, returns first page

Example impact:

- 100-page book: Skips pages 1-3, selects around page 35-40

- 20-page pamphlet: Skips page 1-2, selects around page 8

- Result: ~80% reduction in cover images returned

Date Filtering

from_date- Start year (e.g., "1600")until_date- End year (e.g., "1700")- Automatic splitting for ranges >400 years

Error Handling

HTTP Status Codes

200- Success400- Validation error404- Job not found413- Payload too large415- Unsupported media type422- Unsupported field429- Rate limit exceeded500- Internal server error

Error Response Format

{

"error": "VALIDATION_ERROR",

"details": [

{

"field": "from_date",

"message": "Year must be 4 digits"

}

]

}

Usage Examples

JSON Request (Recommended)

curl -X POST "http://127.0.0.1:8000/api/v1/matchmaking/images/search" \

-H "Content-Type: application/json" \

-d '{

"operation": "IMAGE_MATCHMAKING",

"criteria": [

{

"field": "Printer / Publisher",

"value": "Bern*"

}

],

"from_date": "1600",

"until_date": "1620",

"maxResults": 5,

"avoid_covers": true

}'

Form Data Request (Legacy)

curl -X POST "http://127.0.0.1:8000/api/v1/matchmaking/images/search/form" \

-F "operation=IMAGE_MATCHMAKING" \

-F "projectId=demo" \

-F "agentId=demo" \

-F "from_date=1600" \

-F "until_date=1650" \

-F "maxResults=5"

Search with Multiple Criteria

curl -X POST "http://127.0.0.1:8000/api/v1/matchmaking/images/search" \

-H "Content-Type: application/json" \

-d '{

"operation": "IMAGE_MATCHMAKING",

"criteria": [

{

"field": "Title",

"value": "Historia*"

},

{

"field": "Place",

"value": "Basel"

}

],

"from_date": "1600",

"until_date": "1700",

"maxResults": 10,

"page_selection": "content"

}'

JavaScript Frontend Integration

async function searchImages() {

const requestData = {

operation: 'IMAGE_MATCHMAKING',

criteria: [

{

field: 'Printer / Publisher',

value: 'Bern*'

}

],

from_date: '1600',

until_date: '1700',

maxResults: 10,

avoid_covers: true,

page_selection: 'content'

};

const response = await fetch('/api/v1/matchmaking/images/search', {

method: 'POST',

headers: {

'Content-Type': 'application/json'

},

body: JSON.stringify(requestData)

});

const data = await response.json();

if (data.images) {

// Synchronous results

renderImages(data.images);

} else if (data.jobId) {

// Async job - poll for results

pollJobResults(data.jobId);

}

}

function renderImages(images) {

images.forEach(img => {

// Show thumbnail first

const thumbnail = document.createElement('img');

thumbnail.src = img.thumbnailUrl;

thumbnail.onclick = () => {

// Load full image on click

thumbnail.src = img.fullImageUrl;

};

document.body.appendChild(thumbnail);

});

}

Image URL Patterns

IIIF URL Structure

- Thumbnail:

https://www.e-rara.ch/i3f/v21/{pageId}/full/,150/0/default.jpg - Full size:

https://www.e-rara.ch/i3f/v21/{pageId}/full/full/0/default.jpg - Custom size:

https://www.e-rara.ch/i3f/v21/{pageId}/full/,{height}/0/default.jpg

Size Options

full- Original dimensions,150- Height constrained to 150px300,- Width constrained to 300px!300,300- Fit within 300×300 boxpct:25- 25% of original size

Development

Project Structure

├── image_matchmaking_api.py # Main FastAPI application

├── e_rara_id_fetcher.py # E-rara search logic

├── e_rara_image_downloader_hack.py # IIIF manifest processing

├── README.md # This file

└── read.md # Original API specification

Dependencies

- FastAPI - Web framework

- Uvicorn - ASGI server

- Requests - HTTP client

- BeautifulSoup4 - HTML/XML parsing

- python-multipart - Form data handling

Adding Features

To extend the API:

- New search criteria: Update

parse_criteria()function - Image processing: Integrate with vision models in

process_job() - Caching: Add Redis/memory cache for manifest data

- Authentication: Add JWT/API key middleware

- Rate limiting: Implement request throttling

Testing

# Start the development server

uvicorn image_matchmaking_api:app --reload --log-level debug

# Test JSON endpoint with content page selection

curl -X POST "http://127.0.0.1:8000/api/v1/matchmaking/images/search" \

-H "Content-Type: application/json" \

-d '{

"operation": "IMAGE_MATCHMAKING",

"criteria": [

{

"field": "Place",

"value": "Basel*"

}

],

"from_date": "1600",

"until_date": "1610",

"maxResults": 3,

"avoid_covers": true,

"page_selection": "content"

}'

# Test legacy form endpoint

curl -X POST "http://127.0.0.1:8000/api/v1/matchmaking/images/search/form" \

-F "operation=IMAGE_MATCHMAKING" \

-F "projectId=test" \

-F "agentId=test" \

-F "from_date=1600" \

-F "until_date=1610" \

-F "maxResults=2"

🚀 Performance Optimizations (v2.0)

The latest version includes comprehensive performance improvements based on a systematic 3-week optimization plan:

✅ Week 1: Intelligent Caching Layer

- Manifest caching: LRU cache (1000 items) for IIIF manifest data - eliminates repeated API calls

- Image validation caching: LRU cache (2000 items) for image accessibility checks

- Cache management: Monitor hit rates and clear caches via API endpoints

- Impact: 80-90% faster performance for subsequent requests

✅ Week 2: Concurrent Processing

- Parallel record processing: ThreadPoolExecutor for multi-record requests

- Configurable concurrency: Adjustable max_workers (default: 4) based on system resources

- Smart batching: Optimal performance scaling for both single and bulk requests

- Impact: 3-5x faster processing for multi-record searches

✅ Week 3: Optional Image Validation

- Configurable validation: Skip image accessibility checks for speed (

validate_images: false) - Smart defaults: Validation enabled by default to ensure image quality

- Performance monitoring: Track validation impact and cache efficiency

- Impact: 30-50% speed improvement when validation is disabled

Additional Performance Features

- Smart Page Selection: Automatically skips book covers - 50-80% better image relevance

- Enhanced Field Mapping: Case-insensitive matching reduces search failures

- Robust Error Handling: Prevents cascading failures in bulk operations

Performance Monitoring

Check current performance status:

# Cache statistics

curl http://localhost:8000/api/v1/cache/stats

# Performance configuration

curl http://localhost:8000/api/v1/performance/config

# Clear caches if needed

curl -X POST http://localhost:8000/api/v1/cache/clear

Testing Performance Improvements

Use the included test script:

python3 test_performance.py

Or the quick test launcher:

./quick_test.sh

Performance Impact Summary

- First-time requests: 30-50% faster with optional validation disabled

- Cached requests: 80-90% faster with manifest caching

- Multi-record requests: 3-5x faster with concurrent processing

- Image relevance: 50-80% improvement through smart page selection

Contributing

- Follow the existing code structure and naming conventions

- Add logging for new features using the configured logger

- Include error handling and validation for new endpoints

- Update this README for any API changes

License

This project interfaces with e-rara.ch, a service of the ETH Library. Please respect their terms of service and usage guidelines.

Previous

Hackathon Bern

Next project