Evaluation of the project against the given criteria:

Technical Functionality (3.0)

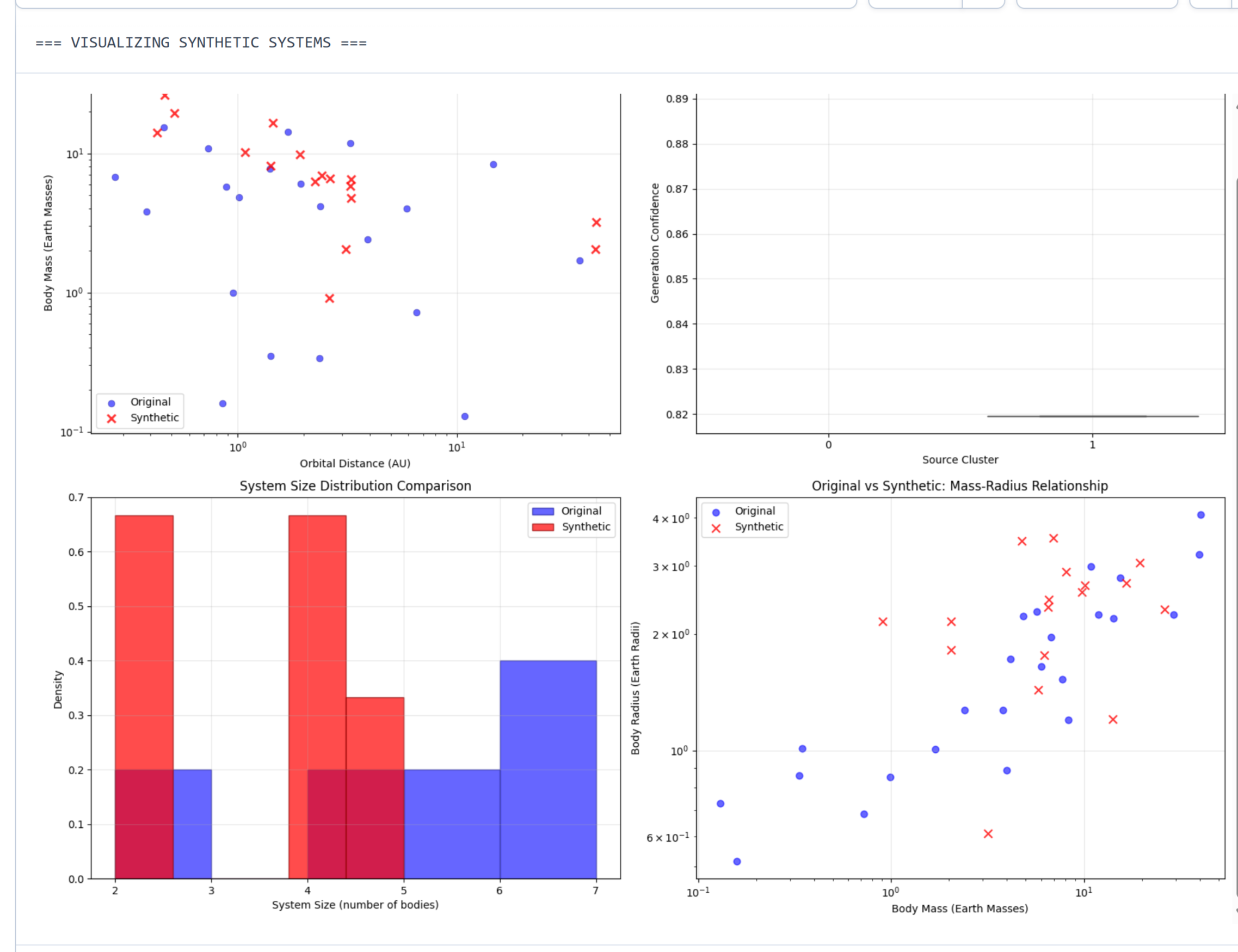

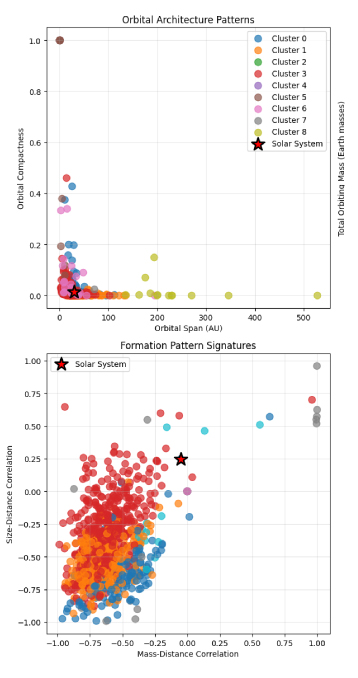

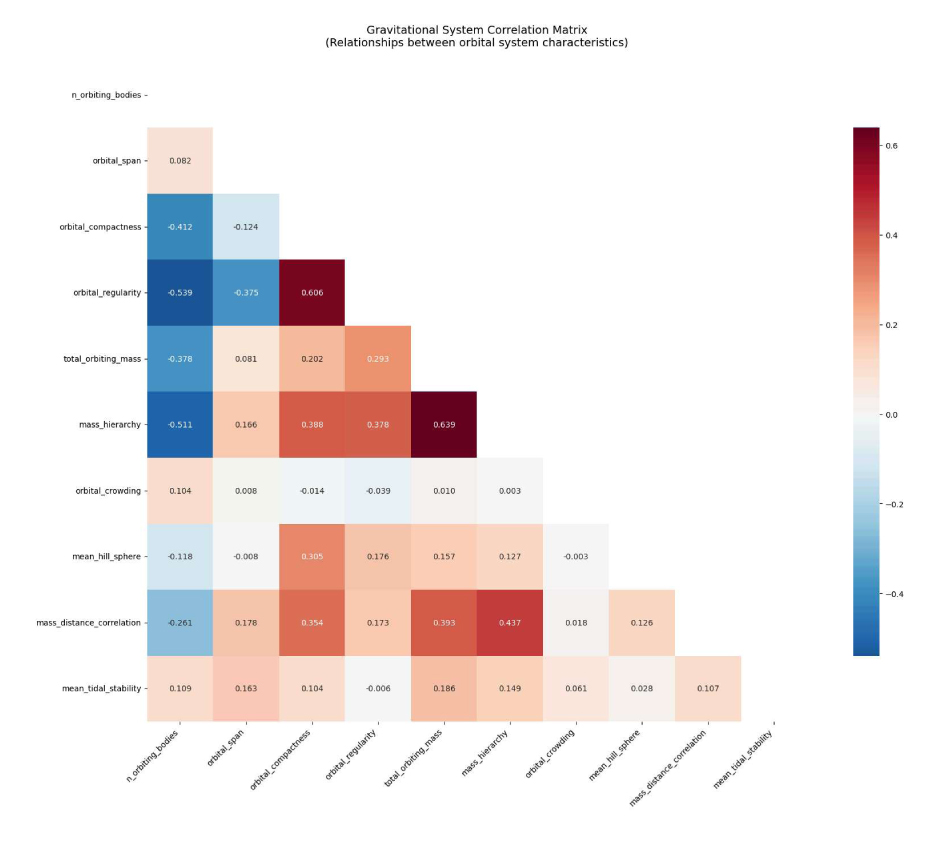

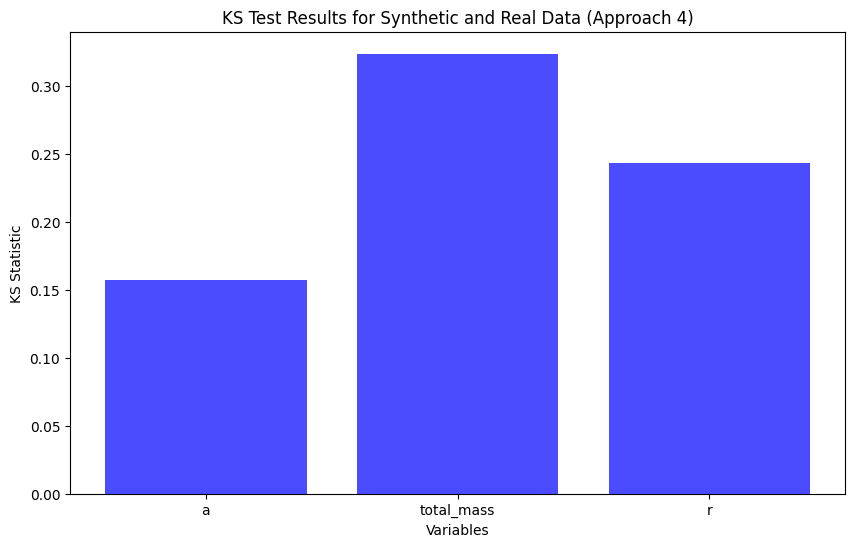

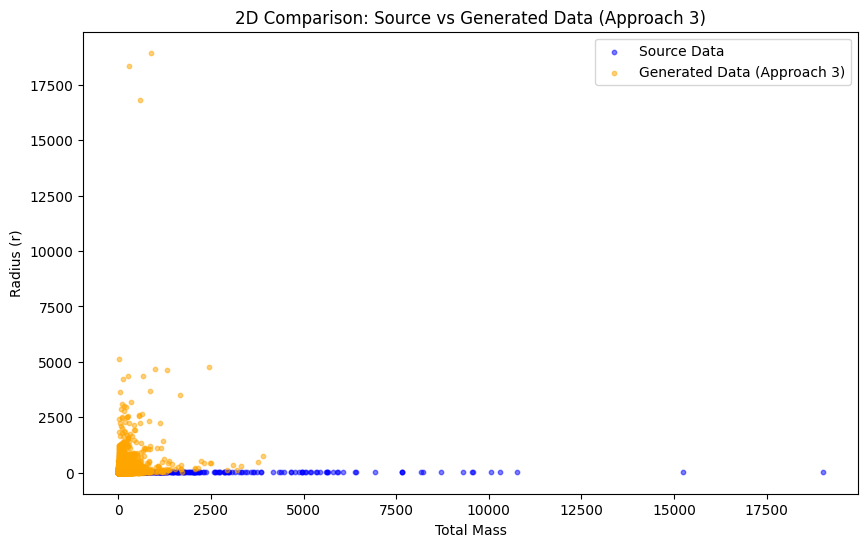

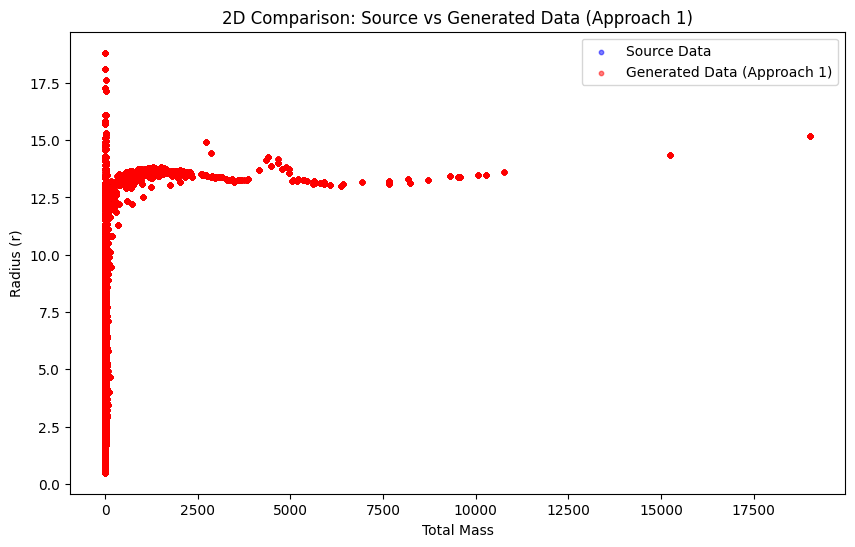

While the documentation shows a clear understanding of the problem space and an ambition to employ AI, details of the actual technical implementation are sparse. The project appears to remain largely conceptual, with few concrete outputs, which indicates limited functionality. Despite the ambition to use AI for complex system modeling, the progress shown through images and linked references points to a proof-of-concept or research-idea stage rather than a functional algorithm. The team demonstrates some technical understanding, but considerable work remains before the project reaches production-level functionality.

User Experience (2.0)

The project provides no user interface or interactive demonstration, so user experience cannot be evaluated directly. The links to external resources and the images, while informative, do not substitute for firsthand experience, nor do they show the concept applied in a user-friendly context.

Skillful use of AI (3.0)

The project's ambition to deploy AI for creating synthetic planetary systems suggests a clear understanding of AI's potential in complex modeling scenarios. However, because this evaluation is based on implemented functionality, the assessment here is largely speculative. The project establishes a clear need for AI but does not describe specific, realized AI applications within the scope provided. The score in this category therefore reflects future potential rather than current achievement.

Uniqueness / Creativity / Fun Factor (3.0)

The aim of using AI to create a large number of unique synthetic planetary systems to guide research is creative. However, the novelty is undercut by existing uses of similar algorithmic generation in planetary simulations. While the approach is ambitious, at its current documented stage it does not add a groundbreaking or particularly engaging user experience.

Potential / Market Impact (3.0)

The project's concept addresses a current and growing area of interest (exoplanet discovery and characterization), particularly as AI-driven numerical modeling gains traction. Its potential is evident, but assessing its market impact would require clearer demonstrations, user engagement, and possibly partnerships with observatories or research institutions. For now, the market potential is recognizable but has not been demonstrated to the standard needed for commercialization or widespread adoption.

Overall Feedback / Recommendations

The project shows promising potential but appears to be in an early conceptual phase.

Positive Aspects:

- Strong, clear context and problem definition.

- Appreciable ambition to integrate AI for complex problem-solving.

Challenges & Recommendations:

- Progress from Idea to Prototype: To move from a conceptual stage to a product that can be evaluated and improved, focus should shift from documentation to functional implementation. This might involve developing even a stripped-down, minimal version of the AI system that generates basic synthetic planetary systems and demonstrates its logic in a form users can interact with (such as a web app or a simple simulation).

- Enhance User Experience: Although functionality and user interface design are distinct activities, even a small interactive or simulation touchpoint would significantly improve the score in this category and would likely attract users or stakeholders more effectively than image links or references alone.

- AI Integration: Document how AI specifically extends capabilities beyond existing deterministic methods. Consider exploring generative models (such as Generative Adversarial Networks or Variational Autoencoders) for system generation. Use AI to accelerate iterations and tune variables in less computationally intensive ways, even if simplifications are needed for the demo phase.
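The recommendations above call for a stripped-down generator and for a comparison against existing non-AI methods. The sketch below is a hypothetical illustration of what such a baseline could look like: it is a plain random-sampling generator, not an AI model, and every name, distribution, and parameter range in it (stellar masses of 0.5 to 1.5 solar masses, one to eight planets, log-uniform orbital distances from 0.05 to 30 AU) is an assumption chosen for illustration. The only physics used is Kepler's third law, P² = a³ / M, with P in years, a in AU, and M in solar masses, neglecting planet mass.

```python
import math
import random
from dataclasses import dataclass
from typing import List


@dataclass
class Planet:
    semi_major_axis_au: float  # orbital distance in astronomical units
    period_years: float        # orbital period from Kepler's third law


@dataclass
class PlanetarySystem:
    star_mass_msun: float      # stellar mass in solar masses
    planets: List[Planet]


def orbital_period_years(a_au: float, star_mass_msun: float) -> float:
    """Kepler's third law, P^2 = a^3 / M, neglecting the planet's mass."""
    return math.sqrt(a_au ** 3 / star_mass_msun)


def generate_system(rng: random.Random,
                    min_planets: int = 1,
                    max_planets: int = 8,
                    a_min_au: float = 0.05,
                    a_max_au: float = 30.0) -> PlanetarySystem:
    """Hypothetical random-sampling baseline (not an AI model).

    All distributions and ranges are illustrative assumptions.
    """
    # Stellar mass drawn uniformly between 0.5 and 1.5 solar masses (assumption).
    star_mass = rng.uniform(0.5, 1.5)
    # Number of planets drawn uniformly from the inclusive range.
    n = rng.randint(min_planets, max_planets)
    # Semi-major axes drawn log-uniformly, then sorted from inner to outer orbit.
    log_min, log_max = math.log(a_min_au), math.log(a_max_au)
    axes = sorted(math.exp(rng.uniform(log_min, log_max)) for _ in range(n))
    planets = [Planet(a, orbital_period_years(a, star_mass)) for a in axes]
    return PlanetarySystem(star_mass, planets)


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so runs are reproducible
    for system in (generate_system(rng) for _ in range(3)):
        print(f"M* = {system.star_mass_msun:.2f} Msun, {len(system.planets)} planets")
        for p in system.planets:
            print(f"  a = {p.semi_major_axis_au:.3f} AU, P = {p.period_years:.3f} yr")
```

A baseline like this gives the team something concrete to measure an AI generator against, for example whether a learned model reproduces observed exoplanet statistics more closely or produces systems faster at comparable quality.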

Final Rating (Avg.)

**Average of scores: (3.0 + 2.0 + 3.0 + 3.0 + 3.0) / 5**

Average = 2.8, which rounds to 3.0. To give more granular, progress-oriented feedback that recognizes the project's conceptual ambitions and potential, the overall rating is raised by half a point:
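The averaging step can be reproduced in a few lines of Python as a transparency check. The category names and scores are taken from this review; rounding to the nearest whole point with the built-in `round` is an assumption about how the rounding is meant. The final 3.5 rating is an editorial judgment and is deliberately not computed here.

```python
from statistics import mean

# Category scores as stated in this review (out of 5).
scores = {
    "Technical Functionality": 3.0,
    "User Experience": 2.0,
    "Skillful use of AI": 3.0,
    "Uniqueness / Creativity / Fun Factor": 3.0,
    "Potential / Market Impact": 3.0,
}

average = mean(scores.values())  # (3.0 + 2.0 + 3.0 + 3.0 + 3.0) / 5
rounded = round(average)         # nearest whole point

print(f"Average: {average:.1f}, rounded: {rounded}")
```

Python's `round` breaks exact ties toward the even integer, but 2.8 is not a tie, so it rounds to 3 under any common convention.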

Overall Project Rating: 3.5 / 5 (Aspirational with clear potential but in early conceptual stages)

Given the right direction, investment, and focus on implementation and user experience, this project's rating could rise significantly. It currently sits between idea formulation and prototype development, which is a good starting point if the team follows through. For future rounds, hackathons, or a move toward market, the team should aim to produce more tangible output, particularly in AI application and user engagement.

🅰️ℹ️ generated with APERTUS-70B-INSTRUCT

Screenshot from Celestia by TheLostProbe CC BY 4.0
